Three Design Questions the LlamaIndex Article Answers

Jerry Liu’s article “Does MCP Kill Vector Search?”, published on the LlamaIndex blog, answers three critical design questions that every AI architect should ask when weighing federated MCP against traditional RAG pipelines.

🔍 1. When can you safely punt structured queries to federated MCP, and when do you still need a unified index?

- Federated MCP wins when…
  - You need live data: “How many open high-priority Jira tickets?” returns the current count, not last night’s snapshot.
  - You require actionable APIs: “Create a new Salesforce opportunity” or “Post to Slack” can’t be served from an index; these need two-way tool endpoints.
  - You trust each service’s native ranking for source-specific relevance, e.g. Salesforce’s own “top deals” sorting.
- Unified indexing wins when…
  - Your query spans multiple domains, e.g. “What customer issues mention our new mobile feature?” needs tickets (Zendesk), docs (Confluence), and call transcripts (Drive).
  - You need a single, coherent ranking: vector similarity scored over all text in one embedding space is far easier to reason about than stitching together five separately ranked keyword lists whose scores aren’t directly comparable.
  - You’re working with legacy or unstructured content: scanned PDFs, slide decks, and spreadsheets often have no searchable API at all, so they must be parsed (or OCR’d), chunked, and embedded before they can be queried.
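The criteria above can be condensed into a rough routing rule. The sketch below is a hypothetical keyword-based router: `QueryPlan`, `route`, and the cue lists are illustrative assumptions, not code from the article or from LlamaIndex. It only shows how the three signals (a write action, a need for live data, and cross-source or unstructured scope) combine into a choice of MCP, index, or both.

```python
from dataclasses import dataclass

# Hypothetical keyword cues, for illustration only; a production router would
# more likely let the LLM classify the query or select tools itself.
LIVE_CUES = ("how many", "current", "right now", "latest", "today")
ACTION_VERBS = ("create", "post", "update", "close", "assign")


@dataclass
class QueryPlan:
    text: str
    sources: list[str]          # systems the query touches (assumed known upstream)
    unstructured: bool = False  # True if any source is scanned PDFs, slide decks, etc.


def route(plan: QueryPlan) -> str:
    """Return "mcp", "index", or "hybrid" following the criteria above."""
    q = f" {plan.text.lower()} "
    # Writes (create/post/update/...) can only go through two-way tool endpoints.
    if any(f" {verb} " in q for verb in ACTION_VERBS):
        return "mcp"
    needs_live = any(cue in q for cue in LIVE_CUES)
    # Cross-domain questions and unstructured content need a unified index.
    needs_index = plan.unstructured or len(set(plan.sources)) > 1
    if needs_index and needs_live:
        return "hybrid"  # fetch broadly from the index, then drill down via MCP
    if needs_index:
        return "index"
    return "mcp"  # single structured source: trust its native ranking and freshness
```

With these rules, the Jira ticket count goes straight to MCP, the cross-tool “mobile feature” question goes to the index, and a cross-source question that also needs live numbers comes back as `"hybrid"`.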

Example:

• A “live KPI dashboard” agent should call the ERP MCP server for up-to-the-minute numbers.

• A “competitive intelligence” agent should first vector-search last quarter’s slide decks and then, once it finds a relevant deck, call an MCP document-QA tool for granular extraction.

⚡️ 2. What performance and UX trade-offs arise between federated calls vs. centralized RAG?

| Aspect | Federated MCP | Centralized RAG |
|---|---|---|
| Latency | Bounded by the slowest of N API calls; can spike under load. | One query embedding plus one vector lookup; predictable. |
| Throughput | Harder to batch; each MCP call is bespoke. | Vector stores are built for high query volumes and can handle thousands of queries/sec. |
| Consistency | Each endpoint has its own index freshness and ranking model. | A single index gives one uniform freshness window. |
| Complexity | Client must orchestrate parallel calls, timeouts, and retries. | Client sends one query; multi-source ingestion is handled ahead of time by the indexing pipeline. |
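The latency row can be quantified with a standard back-of-the-envelope calculation. When N calls run in parallel, the answer waits for the slowest one, and if each call independently lands in its slow tail with probability p, the chance that at least one does is 1 - (1 - p)^N. The sketch assumes independent calls and p = 0.01 (each call exceeds its own p99 latency); both are simplifying assumptions, and the function names are illustrative.

```python
def fanout_latency(latencies_ms: list[float]) -> float:
    """Wall-clock latency of N calls issued in parallel: the slowest one wins."""
    return max(latencies_ms)


def p_any_slow(n_calls: int, p_slow: float = 0.01) -> float:
    """Chance that at least one of n independent calls lands in its slow tail.

    With p_slow = 0.01, a single call is slow 1% of the time, but a fan-out
    over several sources is slow noticeably more often.
    """
    return 1 - (1 - p_slow) ** n_calls


for n in (1, 3, 10):
    print(f"{n:>2} parallel calls -> P(at least one slow) = {p_any_slow(n):.3f}")
```

Under these assumptions, three sources push the slow-answer rate from 1% to about 3%, and ten sources to nearly 10%, which is why the federated column reads “can spike under load.”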

Real-world scenario:

Asking “What complaints did mobile users file last month?” via federated MCP hits the Zendesk, Salesforce, and Confluence APIs in parallel, so one laggard stalls the entire answer. A RAG pipeline instead fetches the top N complaint passages from a pre-built vector index first, then optionally makes MCP calls on the top candidates for live detail.
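One common mitigation for the laggard problem is a per-call deadline: query every source in parallel and keep whatever arrives in time. The sketch below uses Python’s standard `asyncio.wait_for` and `asyncio.gather`; `call_source` is a hypothetical stand-in for an MCP tool call, with `delay_s` simulating API latency, and the source names are only labels.

```python
import asyncio
from typing import Optional


async def call_source(name: str, delay_s: float) -> str:
    """Hypothetical stand-in for one MCP tool call; delay_s simulates API latency."""
    await asyncio.sleep(delay_s)
    return f"{name}: ok"


async def fan_out(delays: dict[str, float], timeout_s: float) -> dict[str, Optional[str]]:
    """Query every source in parallel; any source that misses the deadline maps to None."""

    async def guarded(name: str, delay_s: float) -> Optional[str]:
        try:
            return await asyncio.wait_for(call_source(name, delay_s), timeout=timeout_s)
        except asyncio.TimeoutError:
            return None  # degrade gracefully instead of stalling the whole answer

    # gather() preserves input order, so results line up with the dict keys.
    results = await asyncio.gather(*(guarded(n, d) for n, d in delays.items()))
    return dict(zip(delays, results))


if __name__ == "__main__":
    print(asyncio.run(fan_out({"zendesk": 0.05, "salesforce": 0.1, "confluence": 2.0}, timeout_s=0.5)))
```

Here the slow Confluence call comes back as `None` after the 0.5 s deadline instead of holding up the Zendesk and Salesforce results; retries and “partial answer” messaging would sit on top of this.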

🧩 3. How do you architect a hybrid pipeline that leverages the strengths of both?

- Fast-Scan Retrieval Layer
  - Component: Vector store (e.g. Pinecone, Weaviate) with embeddings over chunked documents and transcribed text.
  - Role: Quickly surface the top K semantically relevant contexts from across all unstructured sources.
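At its core, the fast-scan layer ranks chunk embeddings by similarity to the query embedding and keeps the top K. The brute-force sketch below shows that operation with cosine similarity over toy 3-dimensional vectors; the chunk ids, sources, and vectors are made up, and production stores such as Pinecone or Weaviate use approximate nearest-neighbor indexes rather than a linear scan.

```python
import heapq
import math

Vector = list[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: Vector, index: list[tuple[str, str, Vector]], k: int = 5) -> list[tuple[float, str, str]]:
    """Brute-force top-K over (chunk_id, source, embedding) rows, best first."""
    scored = ((cosine(query, vec), chunk_id, source) for chunk_id, source, vec in index)
    return heapq.nlargest(k, scored)


# Toy 3-dimensional "embeddings" (hypothetical); real ones come from an embedding model.
INDEX = [
    ("deck-q3-07", "drive", [0.9, 0.1, 0.0]),
    ("JIRA-123", "jira", [0.7, 0.7, 0.0]),
    ("wiki-onboarding", "confluence", [0.0, 0.2, 0.98]),
]
```

Keeping the `source` tag on each hit is what lets the orchestration layer decide which MCP server to call next.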

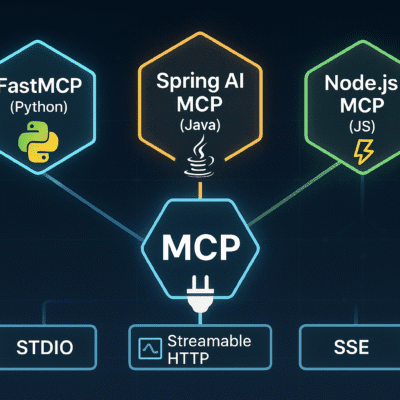

- Federated MCP Orchestration Layer
  - Component: MCP client orchestrator (serverless function or microservice) that holds the vector results and routes sub-queries to the appropriate MCP servers (Jira, Salesforce, custom doc-QA).
  - Role: Enrich or validate the vector hits with live or structured data; optionally take actions.
- Aggregation & Ranking Module
  - Component: A merge step that combines vector-derived snippets and federated MCP responses into a unified candidate set.
  - Role: Apply a re-ranking model (e.g. a cross-encoder) or simple heuristics to surface the best final results.
- Action Executor
  - Component: MCP “toolbox” of tools with write-back capabilities.
  - Role: Perform user-requested actions (e.g. “update ticket status”), with user confirmation flows for sensitive operations.
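For the aggregation step, one well-known simple heuristic is reciprocal rank fusion (RRF), which merges ranked lists without requiring their scores to be comparable. It is swapped in here purely for illustration (the text above names a cross-encoder as the heavier alternative), and the ids in the example are hypothetical.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked lists of ids into one list, best first.

    Each id earns 1 / (k + rank) from every list it appears in; k = 60 is a
    commonly used default that keeps any single top rank from dominating.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by fused score, breaking ties by id so the output is deterministic.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))
```

For example, fusing vector hits `["JIRA-123", "deck-q3-07", "JIRA-456"]` with MCP results `["JIRA-456", "JIRA-123"]` yields `["JIRA-123", "JIRA-456", "deck-q3-07"]`: items surfaced by both layers rise to the top.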

Architectural flow:

- LLM → “Find docs on X” → vector store → top 5 chunks.
- For each chunk tagged `{source: "jira"}`, call the MCP `read_issues` tool → get live issue details.
- Merge chunk text + issue fields → re-rank → present the top answer.
- If the user says “Close those tickets,” trigger the MCP `close_issue` tool with confirmation.
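The flow above can be sketched end to end with in-memory stubs. The tool names `read_issues` and `close_issue` come from the flow; everything else (`vector_search`, `call_tool`, their argument shapes, and the sample chunks and issues) is a hypothetical stand-in rather than a real vector-store client or MCP SDK, and the “re-rank” is a placeholder.

```python
from typing import Callable

# Hypothetical in-memory stand-ins for the vector store and the Jira MCP server.
CHUNKS = [
    {"id": "c1", "text": "Login crash on Android 14", "source": "jira", "issue_key": "MOB-42"},
    {"id": "c2", "text": "Mobile v3.2 release notes", "source": "confluence"},
]
ISSUES = {"MOB-42": {"status": "open", "priority": "high"}}


def vector_search(query: str, k: int = 5) -> list[dict]:
    """Stub: a real store would embed `query` and return the k nearest chunks."""
    return CHUNKS[:k]


def call_tool(name: str, args: dict) -> dict:
    """Stub for an MCP tool invocation; not a real SDK API."""
    if name == "read_issues":
        return {key: dict(ISSUES[key]) for key in args["keys"]}
    if name == "close_issue":
        ISSUES[args["key"]]["status"] = "closed"
        return {"closed": args["key"]}
    raise ValueError(f"unknown tool: {name}")


def answer(query: str) -> list[dict]:
    merged = []
    for chunk in vector_search(query, k=5):
        live = {}
        if chunk["source"] == "jira":
            # Enrich Jira-tagged chunks with live issue fields.
            key = chunk["issue_key"]
            live = call_tool("read_issues", {"keys": [key]})[key]
        merged.append({**chunk, **live})
    # Placeholder re-rank: chunks backed by a live open issue come first.
    return sorted(merged, key=lambda c: c.get("status") != "open")


def close_issues(keys: list[str], confirm: Callable[[str], bool]) -> list[str]:
    """Write-back step: only call close_issue for keys the user confirms."""
    closed = []
    for key in keys:
        if confirm(f"Close {key}?"):
            call_tool("close_issue", {"key": key})
            closed.append(key)
    return closed
```

In an interactive agent, `confirm` could be something like `lambda msg: input(msg + " [y/N] ") == "y"`; passing it in as a callable keeps the confirmation policy separate from the tool call itself.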

The bottom line

Neither federated MCP nor vector search is a silver bullet—each excels in different domains. The optimal AI agent architecture is a hybrid:

- Use vector-powered RAG as your high-throughput semantic filter over unstructured content.

- Plug in federated MCP for real-time, structured data and actionable APIs.

- Orchestrate intelligently: fetch broadly and fast, then drill down precisely where live data or stateful operations are needed.

- Re-rank and aggregate to deliver a consistent, relevance-optimized user experience.

By embracing this layered approach, you get the freshness and interactivity of MCP with the scale and semantic power of vector retrieval—delivering agents that are both knowledgeable and capable.